Artificial intelligence, especially large language models (LLMs), has taken off and is changing the way people work across many industries. These tools save time and simplify everyday tasks, which is why so many people now rely on them.

In many areas, however, reliable internet access is not a given. In Gaza City, where I live, recent events have made a permanent, continuous connection difficult to maintain.

To overcome this obstacle, I looked for ways to use an LLM offline, without an internet connection, and found several methods. In this article, I will share my experience step by step.

Let’s get started.

🛠 Step 1: Install Ollama

Ollama is a powerful runtime that simplifies the installation and execution of open-source LLMs on your local machine. It supports models like LLaMA 2, Mistral, and more.

🔽 Installation

For Windows / macOS / Linux, visit:

👉 https://ollama.com/download

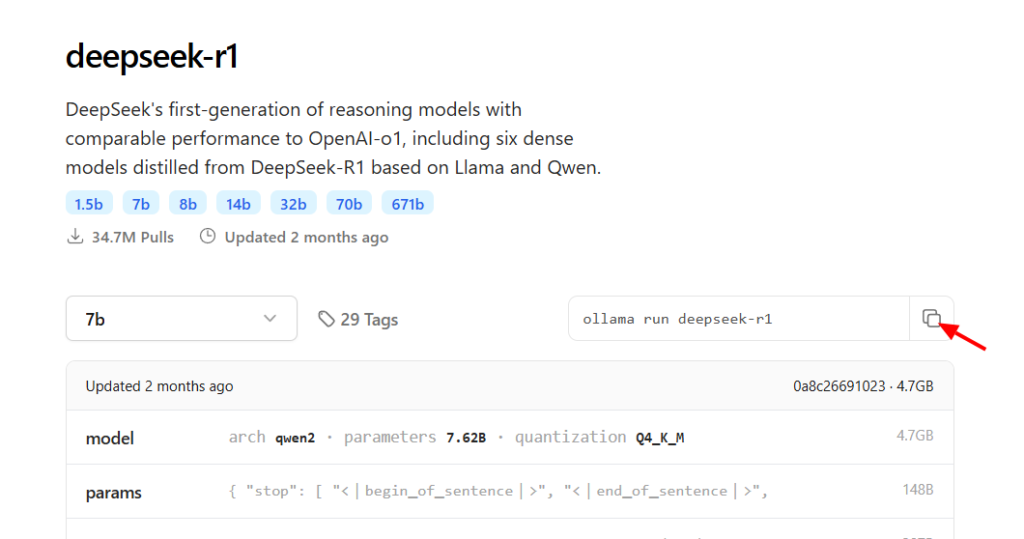
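Once the installer finishes, a quick check from a terminal can confirm that the `ollama` command is available. This is a minimal sketch: the `have` helper is a hypothetical name introduced here for illustration, not part of Ollama.

```shell
# Return success if the named command is available on PATH.
have() { command -v "$1" >/dev/null 2>&1; }

if have ollama; then
    ollama --version    # prints the installed Ollama version
else
    echo "ollama not found on PATH; open a new terminal or re-run the installer"
fi
```

If the command is not found right after installation, opening a new terminal window is usually worth trying before reinstalling.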

🧠 Step 2: Choose and Install an LLM Model

Ollama offers a growing catalog of pre-packaged models. Browse the list of models on the Ollama website, click any model, copy its command, and paste it into your terminal.

Example:

To download and start using DeepSeek-R1:

ollama run deepseek-r1

This will automatically download the model and launch an interactive prompt. You can try other models from the catalog, such as the LLaMA 2 and Mistral models mentioned earlier, in the same way.
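Because the goal is offline use, it may help to download every model you want while a connection is still available. The sketch below uses `ollama pull`, which downloads a model without opening the interactive prompt, and `ollama list`, which shows what is stored locally. The `MODELS` variable is a hypothetical name, and `mistral` is only an assumed example tag; check the catalog for the exact names you want.

```shell
# Models to cache while online. deepseek-r1 comes from the example above;
# mistral is an assumed example tag, so verify it in the Ollama catalog.
MODELS="deepseek-r1 mistral"

if command -v ollama >/dev/null 2>&1; then
    for model in $MODELS; do
        ollama pull "$model"    # download only, no interactive prompt
    done
    ollama list                 # show the models stored on this machine
else
    echo "ollama is not installed; skipping downloads"
fi
```

Once a model appears in the `ollama list` output, `ollama run` should be able to start it from the local copy without downloading it again.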

💻 Step 3: Install Docker

To get a graphical interface (GUI) for interacting with your LLM, we’ll use Open WebUI, a ChatGPT-style web interface that runs in Docker.

Installation:

- Windows/macOS: Download from

👉 https://www.docker.com/products/docker-desktop/ - Linux (Debian-based example):

sudo apt-get update

sudo apt-get install docker.io

sudo systemctl start docker

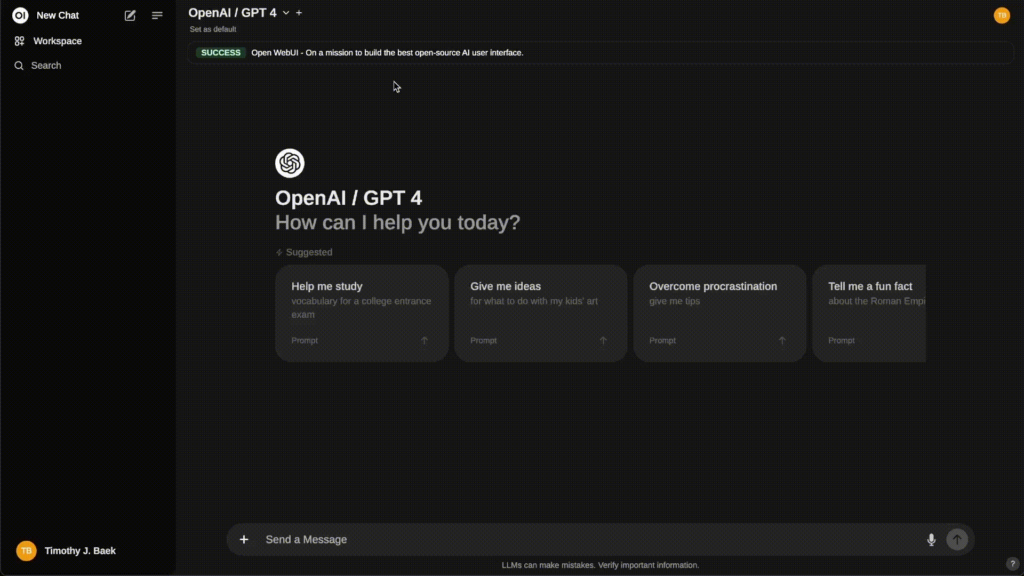

sudo systemctl enable docker🌐 Step 4: Install OpenAI Web UI with Docker

Now let’s set up a friendly browser-based interface to interact with the local LLM, similar to ChatGPT.
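Before starting the Web UI container, it can be worth confirming that Docker is installed and that its background service is reachable. This is a rough sketch; `docker_status` is a hypothetical helper name, and on Linux the `docker info` call may need `sudo` if your user is not in the `docker` group.

```shell
# Print "missing", "stopped", or "running" for the local Docker setup.
docker_status() {
    if ! command -v docker >/dev/null 2>&1; then
        echo "missing"
    elif docker info >/dev/null 2>&1; then
        echo "running"
    else
        echo "stopped"    # installed, but the daemon is unreachable (or needs sudo)
    fi
}

echo "Docker is: $(docker_status)"
```

If the result is `stopped` on Linux, re-running the `systemctl start` command from Step 3 is a reasonable first thing to try.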

Run this command:

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

The web UI will be accessible at:

🔗 http://localhost:3000

It should automatically detect and connect to your running Ollama instance and list the models you have installed.
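If the page does not load or no models show up, a quick probe of both services can narrow down which side is the problem. The sketch below assumes Ollama is listening on its default local port, 11434, and that the Web UI is on host port 3000 as set by `-p 3000:8080` in the command above. The `probe` function and the two variable names are hypothetical, introduced only for this example.

```shell
OLLAMA_URL="http://localhost:11434"   # Ollama's default local address (assumed unchanged)
WEBUI_URL="http://localhost:3000"     # host port chosen in the docker run command

# Print "up" if the URL answers successfully within 5 seconds, otherwise "down".
probe() {
    if curl -fsS --max-time 5 -o /dev/null "$1" 2>/dev/null; then
        echo "up"
    else
        echo "down"
    fi
}

echo "Ollama API: $(probe "$OLLAMA_URL/api/tags")"   # /api/tags lists local models
echo "Open WebUI: $(probe "$WEBUI_URL")"
```

Both checks talk only to `localhost`, so they work without an internet connection.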

🚀 Step 5: Run and Enjoy!

With both Ollama and Open WebUI running, you now have a powerful, private, and offline AI assistant.